The Eyes of the Machine: Why the Transition from LLMs to VLMs is the True Medical Revolution

Thoughts on the Convergence of Biological Intelligence and Artificial Perception

By Dr. Stefano Sinicropi, MD, Founder, The HyperCharge Human Engineering Lab

Disclaimer: This blog is for informational purposes only and does not constitute medical advice. The content is not intended to be a substitute for professional medical consultation, diagnosis, or treatment. Always seek the advice of your physician or other qualified health provider with any questions you may have regarding a medical condition.

EXECUTIVE SUMMARY

We are living through the most significant technological transition since the invention of the microscope. For the last two years, the world has been captivated by Large Language Models (LLMs)—Artificial Intelligence that can read, write, and pass the Medical Board Exams.

While impressive, LLMs have a fatal flaw: They are blind. They operate on text, but biology operates on physics, geometry, and light. An LLM can read a textbook about cancer, but it cannot see the subtle asymmetry of a tumor cell.

The next phase of AI—Vision Language Models (VLMs)—fixes this. By giving AI the power of sight, we are moving from an era of "Diagnostic Guesswork" to an era of "Perceptual Certainty." This white paper explores how VLMs will revolutionize Radiology, Neurology, Surgery, and Oncology, and why this shift represents the beginning of the Technological Singularity in Medicine.

THE BLIND ALIEN

In his seminal work The Age of AI, Henry Kissinger makes a chilling observation: Artificial Intelligence is not just a tool; it is an "Alien Intelligence." It perceives reality in dimensions that human biology cannot access. It finds antibiotics like Halicin by seeing molecular patterns that no human chemist could ever deduce [5].

But for the last two years, this "Alien" has been blind.

We have marveled at Large Language Models (LLMs) like ChatGPT. We celebrated when they passed the US Medical Licensing Exam (USMLE). We cheered when Dr. Robert Pearl, former CEO of The Permanente Medical Group (Kaiser Permanente), wrote ChatGPT, MD, predicting that Generative AI would democratize medical knowledge and break the monopoly of the "Sick Care" system [1].

But there was a fatal flaw. LLMs operate on Text. Medicine does not happen in text. Medicine happens in the physical, visual world.

A dermatologist looks at a mole to determine if it is melanoma.

A radiologist looks at a shadow on an X-ray to determine if it is a fracture.

A spine surgeon looks at the micro-motion of a vertebra to determine instability.

A neurologist looks at the asymmetry of a gait to diagnose Parkinson's.

An LLM can read 10 million medical journals, but it cannot see your patient. It relies on a human to describe the problem—and humans are notoriously unreliable narrators. If the human misses the visual cue, the AI misses the diagnosis.

The revolution has finally arrived. We are transitioning from LLMs (Text) to VLMs (Vision Language Models). We are giving the Alien eyes. This isn't just a software update; it is the end of "Diagnostic Guesswork." It is the moment where the machine moves from being a Librarian (who reads about medicine) to a Clinician (who practices it).

THE TECHNOLOGY: FROM "READING" TO "PERCEIVING"

Why Pixels are more powerful than Words.

To understand the magnitude of this shift, you must understand the limitation of language itself. As Ray Kurzweil argues in The Singularity Is Nearer (2024), information technology eventually merges with biology. But language is a Lossy Compression Algorithm.

When a radiologist looks at a complex MRI of a lumbar spine, they see thousands of data points: the hydration of the disc, the calcification of the facet joint, the inflammation of the nerve root. But when they write their report, they compress that 3D reality into three words: "Mild Degenerative Changes." Massive amounts of data are lost in that translation. The nuance is gone. The texture is gone.
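The compression described above can be made concrete with a small sketch. It is a toy model, not a clinical scoring system: the three measurements, the 0-to-1 scales, the averaging rule, and the thresholds are all hypothetical, chosen only to show how two visibly different spines can end up with the same report phrase.

```python
# Toy illustration: distinct imaging findings collapse into one report phrase.
# Field names, scales, and thresholds are hypothetical, not clinical standards.
from dataclasses import dataclass


@dataclass(frozen=True)
class SpineFindings:
    disc_hydration: float       # 0.0 (dry) to 1.0 (fully hydrated)
    facet_calcification: float  # 0.0 (none) to 1.0 (severe)
    nerve_inflammation: float   # 0.0 (none) to 1.0 (severe)


def report_phrase(f: SpineFindings) -> str:
    """Compress three continuous measurements into one coarse phrase."""
    severity = (
        (1.0 - f.disc_hydration) + f.facet_calcification + f.nerve_inflammation
    ) / 3.0
    if severity < 0.2:
        return "Unremarkable"
    if severity < 0.5:
        return "Mild Degenerative Changes"
    return "Advanced Degenerative Changes"


# Patient A: healthy disc, but a clearly inflamed nerve root.
patient_a = SpineFindings(disc_hydration=0.9, facet_calcification=0.1, nerve_inflammation=0.6)
# Patient B: dehydrated disc, some calcification, no inflammation at all.
patient_b = SpineFindings(disc_hydration=0.4, facet_calcification=0.3, nerve_inflammation=0.0)

print(report_phrase(patient_a))
print(report_phrase(patient_b))
```

Both patients receive the same phrase even though the underlying findings differ. The phrase cannot be inverted to recover which patient it came from, and that is what "lossy" means here.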

The VLM Shift: A Vision Language Model (like GPT-4V or LLaVA-Med) connects Pixels to Concepts. It doesn't read the report; it looks at the raw data. It processes the image pixel-by-pixel, detecting patterns that the human eye—limited by evolution—simply cannot see.

It sees the texture of the bone marrow (Osteoporosis) that the radiologist's eye glossed over because they were looking for a fracture.

It sees the subtle inflammation in the capillaries of the retina that predicts Alzheimer's 10 years early.

It sees the protein misfolding that drives cancer.
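One common way to connect "Pixels to Concepts" is to place an image and a set of text labels in a shared embedding space and pick the label whose vector points in the most similar direction. The sketch below shows only that matching step. The three-number embeddings are made up for illustration (real models learn vectors with hundreds or thousands of dimensions), and this is not a claim about how GPT-4V or LLaVA-Med work internally.

```python
# Minimal sketch of image-to-concept matching by cosine similarity.
# The embedding values are invented for illustration; a real system would
# obtain them from trained image and text encoders.
import math
from typing import Dict, List


def cosine_similarity(u: List[float], v: List[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def best_matching_concept(image_vec: List[float], concepts: Dict[str, List[float]]) -> str:
    """Return the concept whose embedding is most similar to the image embedding."""
    return max(concepts, key=lambda name: cosine_similarity(image_vec, concepts[name]))


# Hypothetical embedding of one X-ray image.
image_embedding = [0.9, 0.1, 0.3]

# Hypothetical embeddings of three text concepts.
concept_embeddings = {
    "fracture": [0.1, 0.9, 0.2],
    "low bone density": [0.8, 0.2, 0.4],
    "normal": [0.2, 0.2, 0.9],
}

best_concept = best_matching_concept(image_embedding, concept_embeddings)
print(best_concept)
```

The design point is that no written report sits between the image and the label: the comparison happens directly on numbers derived from the pixels.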

As Kissinger argued, AI processes data without the cognitive bias of human evolution. Humans evolved to see tigers and berries. We did not evolve to see cancer cells or micro-fractures. VLMs have no evolutionary blind spots. They see everything.

THE IMPACT ON SPECIALTIES: BEYOND THE LAB

How Visual AI upgrades the fundamental pillars of Human Engineering.

RADIOLOGY: THE END OF THE "GRAYSCALE GUESS"

Standard radiology is subjective. A radiologist looks at a grayscale X-ray and makes a judgment call based on their training, their fatigue level, and the quality of their coffee that morning. The VLM Application: The AI analyzes the Texture and Micro-Architecture of the bone. It quantifies the density of the trabecular meshwork (the scaffolding inside the bone).

The Sinicropi Logic: This is why we use REMS (Echolight) technology at The Institute. Standard DEXA only measures density (how much bone). REMS uses radiofrequency spectrometry to "see" the fragility of the bone matrix. A VLM can instantly correlate that fragility score with 50 years of global fracture data to predict—and prevent—the break before it happens [2].

PSYCHIATRY: THE DIGITAL PHENOTYPE

Psychiatry has historically relied on subjective reporting. The doctor asks, "How do you feel?" and the patient answers. This is flawed because patients often mask their true state, or they lack the vocabulary to describe their despair. The VLM Shift: Mental health disorders have physical signatures.

Micro-Expressions: VLMs can analyze facial micro-expressions (flattened affect, delayed response, asymmetrical smiling) to diagnose depression with higher accuracy than clinical interviews [9].

Digital Phenotyping: By analyzing speech patterns, movement, and eye contact in video, VLMs can detect the early onset of Schizophrenia or PTSD before a crisis occurs.

The Sinicropi Take: We are moving from "Listening" to "Measuring." This validates our use of BrainView (qEEG) and EyeBox—we use technology to see the electrical failure of the brain. We aren't treating "feelings"; we are treating "circuits."

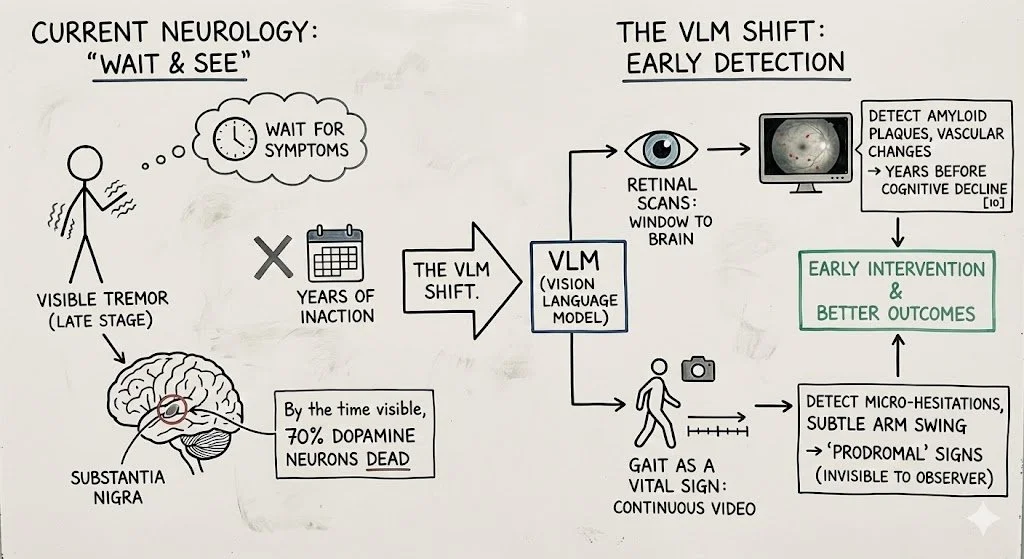

NEUROLOGY: THE INVISIBLE TREMOR

Neurology is often a "wait and see" discipline. We wait for the tremor to become visible to the naked eye. By the time a Parkinson's tremor is visible, commonly cited estimates hold that roughly 70% of the dopamine neurons in the Substantia Nigra are already dead. The VLM Shift:

Retinal Scans: The eye is a window to the brain. VLMs scanning retinal images can detect amyloid plaques (Alzheimer's) and vascular changes years before cognitive decline begins [10].

Gait as a Vital Sign: Continuous video monitoring can detect the "prodromal" (early) motor signs of Parkinson's—micro-hesitations in gait, subtle changes in arm swing—that are invisible to a human observer.
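To make "subtle changes in arm swing" measurable, one option is a left-right asymmetry index computed from joint angles that a pose-estimation step has already extracted from video. The sketch below assumes such angles are available as plain lists of degrees. The sample values are invented, and the formula is a widely used symmetry-index form (difference divided by the mean, as a percentage), not a validated diagnostic threshold.

```python
# Sketch: arm-swing asymmetry from per-frame shoulder angles (in degrees).
# Input angles are assumed to come from an upstream pose-estimation step;
# the sample numbers below are invented for illustration.
from typing import Sequence


def swing_amplitude(angles_deg: Sequence[float]) -> float:
    """Peak-to-peak range of the arm swing over the recording."""
    return max(angles_deg) - min(angles_deg)


def asymmetry_index(left_amp: float, right_amp: float) -> float:
    """Absolute left-right difference as a percentage of the mean amplitude."""
    mean_amp = (left_amp + right_amp) / 2.0
    if mean_amp == 0.0:
        return 0.0  # no swing on either side, so no measurable asymmetry
    return abs(left_amp - right_amp) / mean_amp * 100.0


left_arm = [-20.0, 0.0, 20.0, 0.0, -20.0]   # swings through 40 degrees
right_arm = [-12.0, 0.0, 12.0, 0.0, -12.0]  # swings through 24 degrees

index = asymmetry_index(swing_amplitude(left_arm), swing_amplitude(right_arm))
print(f"Arm-swing asymmetry: {index:.1f}%")  # prints "Arm-swing asymmetry: 50.0%"
```

Tracked over weeks rather than read once in the exam room, a number like this turns gait into a trend line instead of an impression.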

SURGERY: THE AUGMENTED EYE

As a surgeon, I rely on my eyes and my hands. But my eyes are limited to the visible spectrum. I cannot see blood flow inside a tissue. I cannot see a nerve buried in muscle. The VLM Shift: Computer Vision Navigation.

Real-Time Anatomy: VLMs can overlay MRI data onto the patient in real-time (Augmented Reality), allowing the surgeon to "see through" tissue to identify nerves and blood vessels.

Error Prevention: An AI "Co-Pilot" watches the surgery. If I am about to place a screw 1mm too close to a nerve, the VLM calculates the trajectory and flags it instantly. It is the end of "blind spots" [11].
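The trajectory check described above reduces, in its simplest form, to a geometry problem: how close does the planned screw path pass to a known structure? The sketch below treats the screw path as a straight segment and the nerve as a single point, both in millimeters in one shared coordinate frame. Those simplifications, the function names, and the 2 mm safety margin are assumptions for illustration, not parameters of any real navigation system.

```python
# Sketch: flag a planned screw path that passes too close to a nerve.
# Simplifying assumptions: straight path, nerve modeled as a point,
# screw radius ignored, hypothetical 2 mm safety margin.
import math
from typing import Tuple

Point = Tuple[float, float, float]


def distance_point_to_segment(p: Point, a: Point, b: Point) -> float:
    """Shortest distance from point p to the segment running from a to b."""
    ab = tuple(bi - ai for ai, bi in zip(a, b))
    ap = tuple(pi - ai for ai, pi in zip(a, p))
    ab_len_sq = sum(c * c for c in ab)
    if ab_len_sq == 0.0:
        return math.dist(p, a)  # degenerate segment: entry and tip coincide
    # Project p onto the line, then clamp to stay within the segment.
    t = sum(x * y for x, y in zip(ap, ab)) / ab_len_sq
    t = max(0.0, min(1.0, t))
    closest = tuple(ai + t * c for ai, c in zip(a, ab))
    return math.dist(p, closest)


def trajectory_is_flagged(entry: Point, tip: Point, nerve: Point,
                          margin_mm: float = 2.0) -> bool:
    """True when the path comes closer to the nerve than the safety margin."""
    return distance_point_to_segment(nerve, entry, tip) < margin_mm


entry_point = (0.0, 0.0, 0.0)
screw_tip = (0.0, 0.0, 40.0)

near_nerve = (1.5, 0.0, 20.0)  # 1.5 mm from the path
far_nerve = (3.0, 4.0, 20.0)   # 5.0 mm from the path

print(trajectory_is_flagged(entry_point, screw_tip, near_nerve))
print(trajectory_is_flagged(entry_point, screw_tip, far_nerve))
```

A real system would also have to account for registration error, tissue shift, and the full shape of the nerve, which is where the vision model earns its keep. The arithmetic at the end of the pipeline stays this simple.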

THE ROBOTICS REVOLUTION: FROM ASSISTANCE TO AUTONOMY

How Vision AI gives the Robot a Brain.

Currently, surgical robots (like the da Vinci) operate on a "Master-Slave" model. The robot does nothing unless the surgeon moves their hand. It is a fancy tool, but it has no intelligence. The VLM Shift: Vision Language Models are the missing link that allows robots to perceive, understand, and act autonomously.

The "Smart" Suction: Recent research demonstrates VLMs guiding robots to autonomously suction blood during surgery by "seeing" the field and distinguishing between active bleeding and static clots [12].

The Autonomous Stitch: VLMs can analyze tissue texture in real-time to adjust the tension of a suture, preventing tissue tearing in a way human hands cannot feel.

The Future: We are moving toward Level 4 Autonomy—where the robot identifies the tumor margin with microscopic precision and executes the resection faster and cleaner than any human hand. The surgeon becomes the "Pilot," overseeing the flight path, but the AI flies the plane.

ONCOLOGY: THE END OF "CARPET BOMBING"

Integrating CRISPR, Nanobots, and Vision AI.

Oncology is currently a war of attrition. We use chemotherapy to carpet bomb the body, hoping to kill the cancer before we kill the patient. It is crude, toxic, and inefficient. The VLM Shift:

CRISPR Control: AI models are now being used to design CRISPR edits that minimize off-target effects. VLMs "see" the genome in 3D, helping ensure the edit happens only where intended.

Nanobot Navigation: As Kurzweil predicted, we are entering the age of Nanomedicine. VLMs will act as the "Air Traffic Control" for swarms of nanobots, guiding them visually through the vascular system to hunt metastatic cells individually.

The Sinicropi Take: This is the ultimate Human Engineering. We are moving from "Chemical Warfare" (Chemo) to "Special Ops" (Targeted Removal).

THE "TRANSCENDENCE" OF HUMAN CAPABILITY

Moving from Replacement to Augmentation.

Faisal Hoque, in Transcend: Unlocking Humanity in the Age of AI, argues that the ultimate goal of AI is not to replace the human, but to Augment our capacity for creativity and empathy [7].

The "Patient CEO" Application: With a VLM on your phone, the "9-Minute Tragedy" of the doctor's visit becomes irrelevant.

The New Workflow: You snap a photo of your meal. The AI doesn't just count calories; it calculates the Inflammatory Load based on the texture of the processed food.

The New Audit: You scan your own eye movement (via phone camera). The AI detects the cranial nerve latency of a concussion.

This fulfills Hoque’s vision: The technology handles the Data Processing, allowing the human (Doctor and Patient) to focus on Strategy and Wisdom.

THE ETHICS OF ENGINEERING: AVOIDING THE DYSTOPIA

Why I am betting on a Benevolent Future.

In his provocative book Scary Smart, Mo Gawdat (former Chief Business Officer at Google X) issues a stark warning: AI is not just code; it is a digital being that learns from its parents. If we teach it greed, polarization, and violence, we will raise a monster that amplifies our worst traits [13]. But Gawdat also offers a solution: "We must raise AI to be benevolent."

As a physician, I see a unique opportunity here. In the medical field, we have a head start. Our fundamental operating system is the Hippocratic Oath: First, Do No Harm.

The Utopian Counter-Argument: Critics fear a future where AI denies care or maximizes profit. That is a risk if we leave AI in the hands of insurance adjusters (The "Willie Sutton" model). But if we put AI in the hands of Healers and Human Engineers, the potential is utopian, not dystopian.

The Abundance of Care: Currently, medical expertise is scarce. It takes 15 years to train a neurosurgeon. An AI trained on every neurosurgical procedure in history can replicate that expertise infinitely. We can deploy "AI Super-Doctors" to rural clinics and developing nations for the cost of electricity.

The End of Suffering: AI doesn't get tired. It doesn't get compassion fatigue. It can monitor a patient in pain 24/7 and adjust their micro-environment to ensure comfort.

Radical Longevity: By solving the "Protein Folding" problem and mastering the genome, AI won't just treat disease; it will likely solve the engineering problem of Aging itself.

My Stance: We must be rigorous with our ethics. We must ensure the "Objective Function" of our medical AI is Patient Vitality, not Corporate Profit. But I refuse to let fear stop us. The moral cost of not using AI—of letting millions die from preventable errors and undetected cancers—is far greater than the risk of using it.

We are building the future. Let’s build it with a conscience.

MY TRANSITION: FROM SURGEON TO FUTURIST

I've spent 20 years as a spine surgeon, and I love the technical precision. But I realized that surgery was often fixing the result of a failure, not the cause.

I founded The HyperCharge Human Engineering Lab because I saw a future where we don't just wait for the body to break down. With the advent of VLMs and AI, we now have the tools to see the breakdown coming 10 years in advance.

We can see the metabolic failure.

We can see the structural weakness.

We can see the energetic brownout.

This technology will allow us to move from being a "Mechanic" (who fixes the car after the crash) to an "Engineer" (who redesigns the car so it never crashes).

The Singularity is nearer than you think. And it might save your life.

SCIENTIFIC BIBLIOGRAPHY

[1] Pearl, R. (2024). ChatGPT, MD: How AI-Empowered Patients & Doctors Can Take Back Control of American Medicine. Forefront Books.

[2] Li, Y., et al. (2025). "Vision Language Models in Medicine: A Survey." arXiv preprint arXiv:2503.01863.

[3] Zhang, Y., et al. (2024). "Accurate estimation of biological age and its application in disease prediction using a multimodal image Transformer system." Proceedings of the National Academy of Sciences, 121(2), e2308812120.

[4] Jumper, J., et al. (2021). "Highly accurate protein structure prediction with AlphaFold." Nature, 596(7873), 583-589.

[5] Kissinger, H., et al. (2021). The Age of AI: And Our Human Future. Little, Brown and Company.

[6] Lee, K.F., & Chen, Q. (2021). AI 2041: Ten Visions for Our Future. Currency.

[7] Hoque, F. (2023). Transcend: Unlocking Humanity in the Age of AI. Post Hill Press.

[8] Mitchell, M. (2019). Artificial Intelligence: A Guide for Thinking Humans. Farrar, Straus and Giroux.

[9] Guo, W., et al. (2022). "Facial Expression Analysis for Depression Detection: A Review." IEEE Access.

[10] Poplin, R., et al. (2018). "Prediction of cardiovascular risk factors from retinal fundus photographs via deep learning." Nature Biomedical Engineering.

[11] Hashimoto, D. A., et al. (2018). "Artificial Intelligence in Surgery: Promises and Perils." Annals of Surgery.

[12] Kim, T., et al. (2024). "From Decision to Action in Surgical Autonomy: Multi-Modal Large Language Models for Robot-Assisted Blood Suction." arXiv preprint arXiv:2408.07806.

[13] Gawdat, M. (2021). Scary Smart: The Future of Artificial Intelligence and How You Can Save Our World. Bluebird.

About the Author

Dr. Stefano Sinicropi, MD is a Board-Certified Orthopedic Spine Surgeon and a pioneer in the emerging field of Human Engineering. Trained at Columbia University, he spent two decades operating at the highest levels of the traditional medical system, performing thousands of complex spinal surgeries. This deep "insider" experience revealed a critical flaw in modern medicine: the system is designed for crisis management ("Sick Care") rather than biological optimization. Refusing to accept the decline of his patients as inevitable, Dr. Sinicropi pivoted from solely treating structural failure to engineering the cellular "hardware" that prevents it.

As the Founder of The HyperCharge Human Engineering Lab and The Institute for Regenerative Medicine, Dr. Sinicropi bridges the gap between elite surgical precision and advanced biophysics. His proprietary protocols utilize Photobiomodulation, Oxygen dynamics, and Regenerative Medicine to treat the root cause of chronic disease—energy failure—rather than just masking symptoms with chemistry. He is the author of Wellness at the Speed of Light and a globally recognized thought leader dedicated to helping patients stop being passive passengers and become the CEO of their own health.